Performance: Not a nice-to-have but a core requirement

Why performance should be part of your requirements, plus actual data on what counts as performant and acceptable.

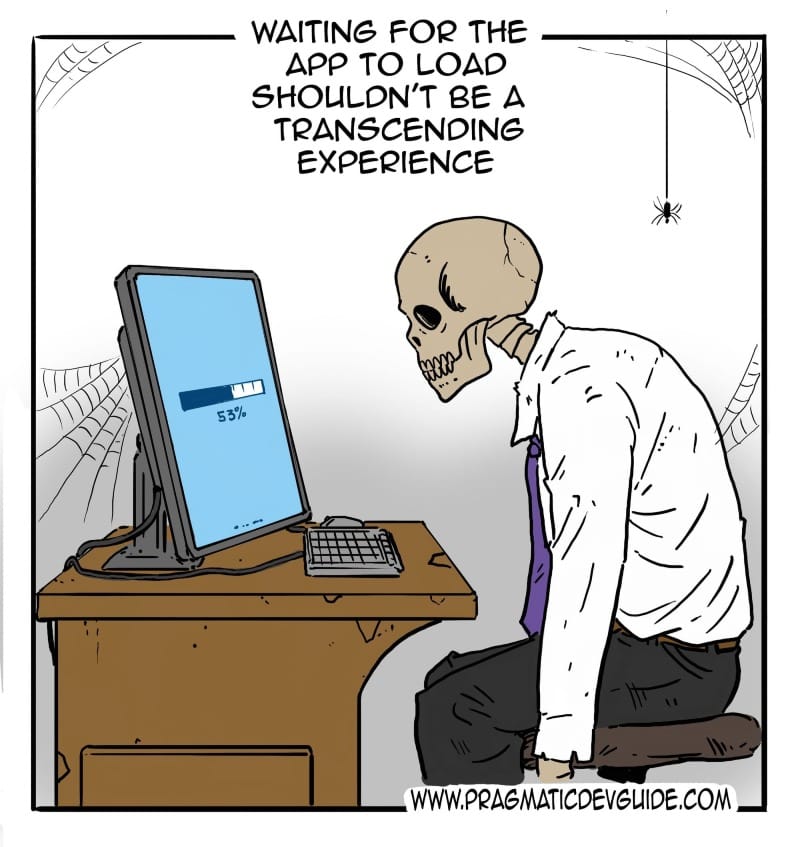

Picture this: It's a busy workday, and your employer rolls out a new email system. Every time you click to open an email, you're left staring at a loading screen for 20 seconds. This isn't just annoying; it's a nightmare. Would you stand for it?

A few years back, a company reached out for help with their internal application. They were desperate.

The development had been outsourced, and everything worked fine in the beginning. But for over a year, the application had been acting weird and had become painfully slow: the main screen took nearly 30 seconds to load. Every attempt by the outsourced team to fix it made matters worse. It was a continuous frustration.

I thought it was a lost cause. I was wrong.

When do we consider something performant?

When you click a button and get a response within 100ms, it is perceived as 'instantaneous'.

A response within 1 second is acceptable. The user will feel the computer is 'working on it', but no special feedback (an hourglass, a progress indicator, etc.) is needed yet.

For delays over 1 second, you should indicate that the computer is busy. Delays like this shouldn't happen during normal use of the software.

Anything longer than 10 seconds needs a progress indicator and, preferably, a way for the user to interrupt the task. The user will probably switch away from the screen, so assume they will need to reorient themselves when they get back. Delays like this should be the exception.

These thresholds have been the industry standard for over 40 years (source: Nielsen Norman Group).
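For readers with a tech background who want to turn these thresholds into something a test can check, here is a minimal Python sketch that maps a measured response time to the kind of feedback the guidelines above call for. The function name `required_feedback` and the category labels are hypothetical, chosen for illustration; only the 0.1 s, 1 s, and 10 s boundaries come from the text.

```python
def required_feedback(seconds: float) -> str:
    """Map a response time (in seconds) to the UI feedback the thresholds suggest.

    Hypothetical helper: the labels are illustrative, the boundaries
    (0.1 s, 1 s, 10 s) follow the guidelines described above.
    """
    if seconds < 0:
        raise ValueError("response time cannot be negative")
    if seconds <= 0.1:
        return "instant"                      # feels instantaneous, no feedback
    if seconds <= 1.0:
        return "acceptable"                   # noticeable, but no feedback needed yet
    if seconds <= 10.0:
        return "busy indicator"               # show that the computer is working
    return "progress indicator + cancel"      # show progress, let the user interrupt


if __name__ == "__main__":
    for measured in (0.05, 0.8, 3.0, 20.0):
        print(f"{measured:>5} s -> {required_feedback(measured)}")
```

Written this way, a requirement such as "opening an email must stay in the 'acceptable' band" becomes something you can verify rather than just hope for.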

And it's essential to make such performance benchmarks an explicit requirement

Developers won't intentionally slow down an application, but you can't just take performance for granted.

Also, performance on a developer's powerful computer, with one concurrent user and minimal data, says little about real-world usage. Performance can, and probably will, degrade as the amount of data and the number of users grow.

But avoid premature optimization: it is the root of all evil!

(A famous quote from Donald Knuth, the renowned computer scientist, mathematician, and professor emeritus at Stanford University.)

For an existing user base, or if you have an idea of the expected traffic, you can test the performance by simulating expected usage and data. Tools exist to mimic many users interacting with the application at once, or to populate the database with fake, generated data.
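Dedicated load-testing tools do this far better, but the idea fits in a few lines. The Python sketch below lets a number of simulated users call a stand-in function at the same time, then checks the 95th-percentile response time against the 1-second threshold. Everything here is a hypothetical illustration: `handle_request` only sleeps instead of calling a real application, and the user counts and the "95% within 1 second" target are assumptions, not figures from the project described in this post.

```python
import math
import random
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> None:
    """Hypothetical stand-in for one user action (e.g. opening the main screen).

    A real test would call the application here; this just sleeps briefly.
    """
    time.sleep(random.uniform(0.01, 0.05))


def timed_call(user_id: int) -> float:
    """Run one simulated action and return how long it took, in seconds."""
    start = time.perf_counter()
    handle_request(user_id)
    return time.perf_counter() - start


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def simulate(concurrent_users: int, requests_per_user: int) -> list[float]:
    """Submit all requests to a pool sized to the user count, so at most
    `concurrent_users` requests are in flight at once; return all latencies."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        jobs = [
            pool.submit(timed_call, user)
            for user in range(concurrent_users)
            for _ in range(requests_per_user)
        ]
        return [job.result() for job in jobs]


if __name__ == "__main__":
    latencies = simulate(concurrent_users=20, requests_per_user=5)
    p95 = percentile(latencies, 95)
    print(f"{len(latencies)} requests, p95 = {p95:.3f} s")
    # Assumed requirement: 95% of requests respond within 1 second.
    if p95 > 1.0:
        raise SystemExit("p95 latency exceeds the 1-second requirement")
```

Looking at a percentile rather than the average is a deliberate choice: an average can look fine while a meaningful share of users still waits far too long.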

If you're building the next Facebook, though, don't over-engineer for traffic that you don't have yet. You might be better off using the resources elsewhere, like marketing. I'd rather have a scaling problem than a performant system without users.

Beware of unnecessary snake oil solutions

When I've raised performance concerns, consultants have tried to sell me unnecessary solutions. If you're not sure and it sounds expensive, get a second opinion.

For readers with a tech background: one consultant suggested using Elasticsearch to search the data of 5,000 customers.

For readers without a tech background: that's like using a bulldozer to plant a flower. The consultant either wanted to gain experience with Elasticsearch, wanted to sell extra man-days, or just didn't know what he was doing. It was a red flag. Or at least a very dark orange one.

Now, what happened to that slow application from the introduction?

When I reviewed the source code and its version control history, I found a stable, performant, and solid foundation. It was buried under a pile of poor updates by clearly inexperienced developers.

Someone in the outsourcing chain had decided to go cheap, and this was becoming very expensive for the client. A slowly sinking ship. And as the customer was in the maritime industry, they hated sinking ships.

We rolled back (the closest we'll ever come to time travel) and rebuilt from the solid foundation. A far cheaper option than rebuilding from scratch.

(And that's why you should own version control from day one. And do code reviews. But those are other stories.)

Conclusion

- Include performance requirements based on the expected number of users and amount of data. There's no harm in including a link to the Nielsen Norman Group article as well.

- If you want to make sure the application is performant before release, get the performance tested.

- Don't optimize for traffic and load that you don't have yet.

- Beware of consultants using your performance concerns to sell unnecessary solutions.
